Agelos Kratimenos

I'm a PhD student at the GRASP Lab of the University of Pennsylvania, advised by Kostas Daniilidis. Previously, I completed my BSc & MEng in Electrical and Computer Engineering at the National Technical University of Athens (NTUA), where I was honored to work with Petros Maragos.

News

07/2024 - 10/2024: Completed an internship at Amazon Lab126 in Sunnyvale, California, working on novel pose rendering of human avatars and Gaussian Splatting!

Research

My research interests lie in 3D computer vision, specifically in dynamic neural rendering, motion decomposition, tracking, and human reconstruction.

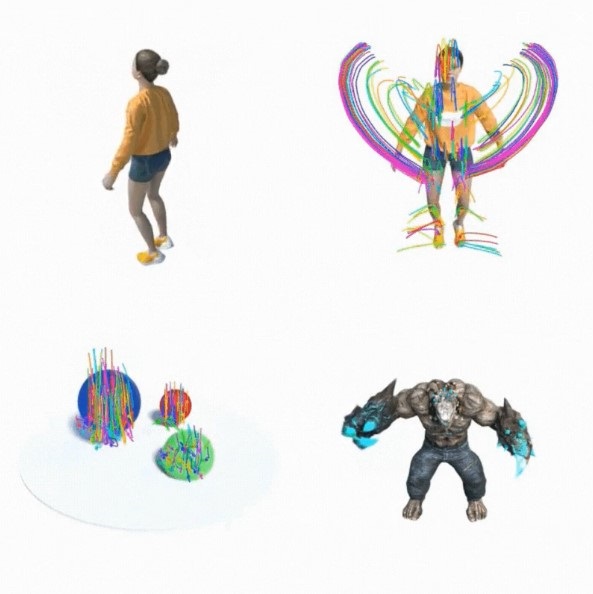

DynMF: Neural Motion Factorization for Real-time Dynamic View Synthesis with 3D Gaussian Splatting

Agelos Kratimenos, Jiahui Lei, Kostas Daniilidis
ECCV, 2024
project page / arXiv / GitHub

DynMF is a sparse trajectory decomposition that enables robust per-point tracking. In addition to novel view synthesis (NVS), it lets us control individual trajectories and enable or disable them, opening up new ways of video editing, dynamic motion decoupling, and novel motion synthesis.

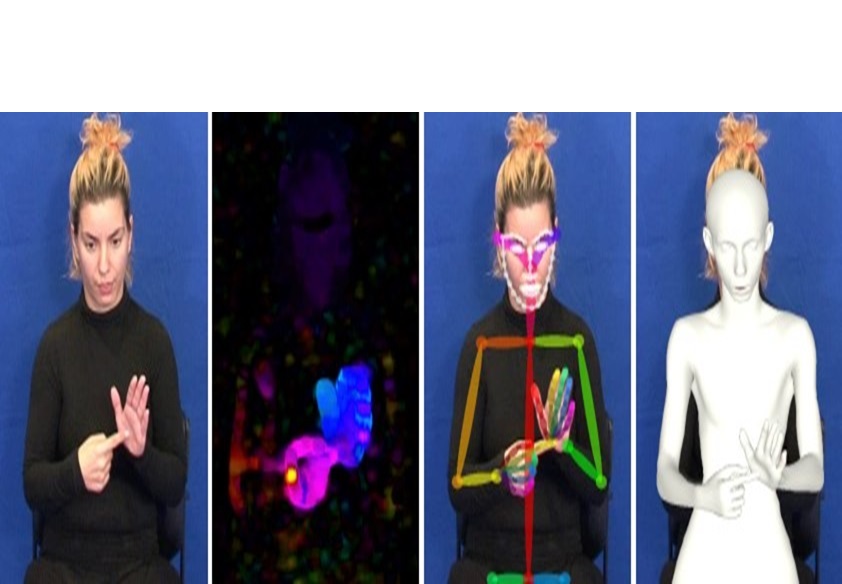

Independent Sign Language Recognition with 3D Body, Hands, and Face Reconstruction

Agelos Kratimenos, Georgios Pavlakos, Petros Maragos
ICASSP, 2021
arXiv

We employ SMPL-X, a contemporary parametric model that enables joint extraction of 3D body shape, face, and hand information from a single image, and we use this holistic 3D reconstruction for the task of sign language recognition.

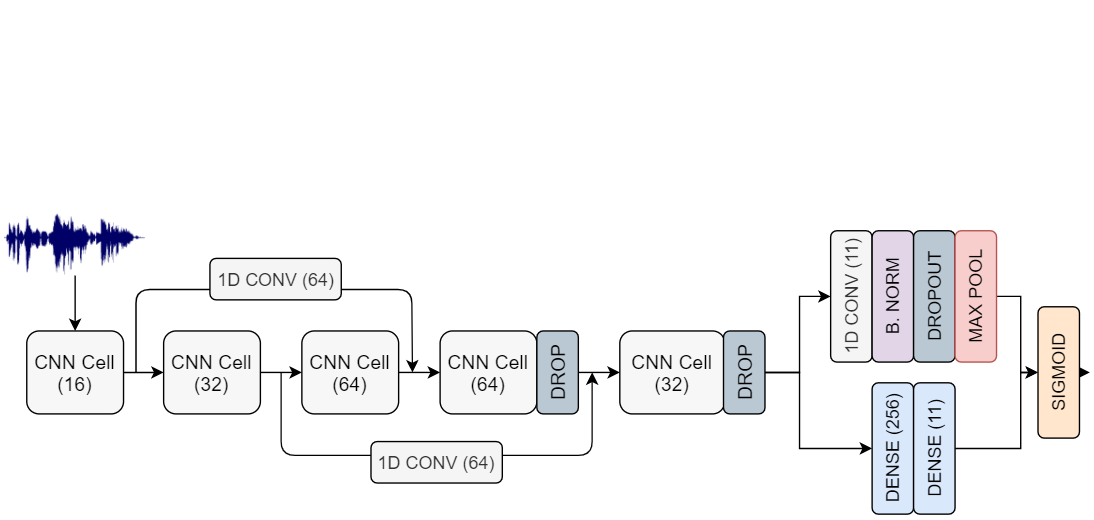

Deep Convolutional and Recurrent Networks for Polyphonic Instrument Classification from Monophonic Raw Audio Waveforms

Kleanthis Avramidis*, Agelos Kratimenos*, Christos Garoufis, Athanasia Zlatintsi, Petros Maragos
ICASSP, 2021
arXiv

We attempt to recognize musical instruments in polyphonic audio by feeding only their raw waveforms into deep learning models. We examine and parameterize various recurrent and convolutional architectures incorporating residual connections in order to build end-to-end classifiers with low computational cost and minimal preprocessing.

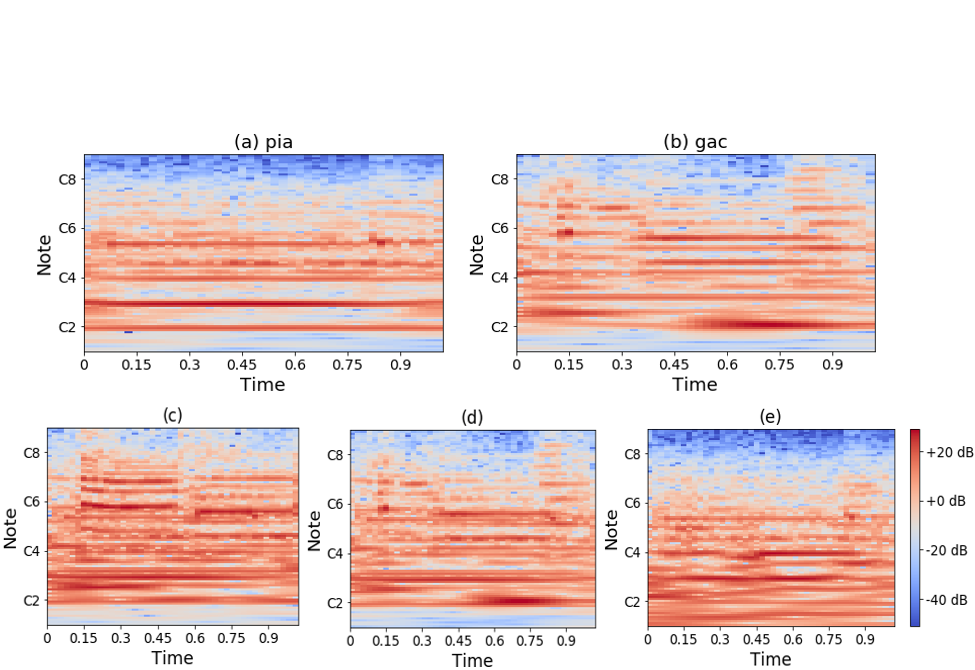

Augmentation Methods on Monophonic Audio for Instrument Classification in Polyphonic Music

Agelos Kratimenos*, Kleanthis Avramidis*, Christos Garoufis, Athanasia Zlatintsi, Petros Maragos
EUSIPCO, 2020
arXiv / GitHub

We present an approach to instrument classification in polyphonic music that trains on monophonic data using mixing-based augmentation methods. Specifically, we experiment with pitch- and tempo-based synchronization, as well as mixes of tracks from similar music genres.

Another website based on Jon Barron's template.